What Is Recursion? A Comprehensive Explanation

Recursion is a concept that lies at the heart of many algorithms and data structures used in computer science and programming. Understanding recursion is essential for any aspiring programmer and can greatly enhance problem-solving abilities and efficiency. In this comprehensive explanation, we will delve into the depths of recursion, exploring its definition, history, types, role in programming, advantages, and disadvantages, as well as the design and analysis of recursive algorithms. By the end, you will have a firm grasp of this powerful yet sometimes elusive concept.

Understanding the Concept of Recursion

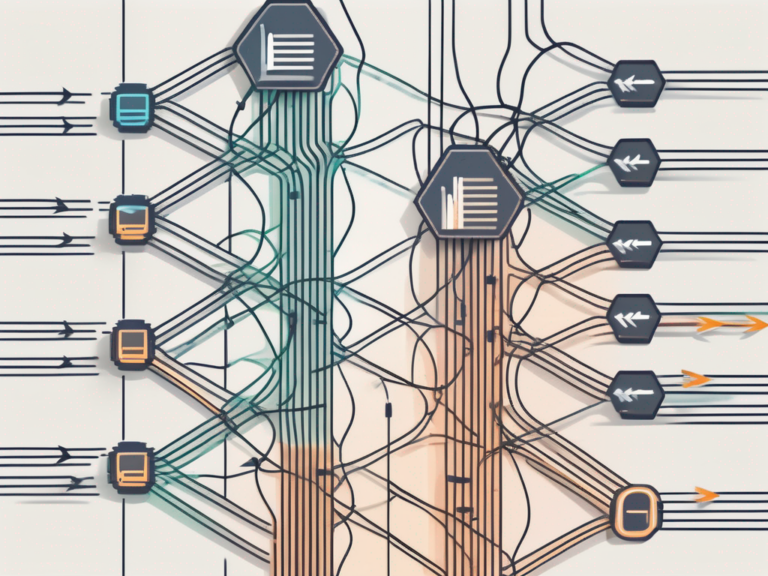

Recursion, in its simplest form, refers to the process of solving a complex problem by breaking it down into smaller instances of the same problem. In code, this means a function or method calls itself. By repeatedly applying the same logic to a smaller and smaller version of the problem, recursion allows us to reach a base case where the problem becomes trivial to solve.

Definition of Recursion

Recursion is a programming technique where a function calls itself to solve a subproblem of the original problem, eventually leading to the solution of the whole problem. It involves breaking down a problem into smaller, simpler subproblems until a base case is reached, which represents the simplest form of the problem.

The History and Evolution of Recursion

Recursion has a rich history that predates computer science. Recursive definitions were already used in nineteenth-century mathematics, for example by Richard Dedekind and Giuseppe Peano in their treatments of the natural numbers. In the 1930s, logicians including Kurt Gödel used recursive functions to help formalize the notion of computability. Since then, recursion has become a fundamental concept in various fields, including mathematics, computer science, and programming.

Some of the earliest applications of recursion are found in mathematics, where it is used in number theory and combinatorics. A familiar example is the Fibonacci sequence, in which each term is defined as the sum of the two preceding terms. By expressing a complex problem in terms of simpler instances of itself, mathematicians could often arrive at compact and elegant solutions.

In computer science, recursion found its place as a powerful problem-solving technique. The ability to break down complex problems into smaller, more manageable subproblems made it possible to tackle a wide range of challenges. As computer technology advanced, recursion became an integral part of programming languages and algorithms, enabling the development of sophisticated software systems.

The Fundamental Principles of Recursion

Recursion is a powerful concept in computer science that allows algorithms to solve complex problems by breaking them down into smaller, more manageable subproblems. It is built upon two fundamental principles: the base case and the recursive case. These principles form the foundation upon which all recursive algorithms are constructed.

Base Case in Recursion

The base case is a critical component of any recursive algorithm. It represents the simplest version of the problem that can be solved directly without further recursion. It acts as the terminating condition for the recursive calls and allows the algorithm to stop recurring when the problem has been broken down to its simplest form.

Imagine you are trying to calculate the factorial of a number using recursion. The base case would be when the number is 0 or 1, as the factorial of 0 or 1 is always 1. Without a base case, the recursion would never terminate on its own; in practice, the program would typically crash with a stack overflow.

Recursive Case in Recursion

The recursive case is where the magic happens in recursive algorithms. It defines how the problem is broken down into smaller subproblems and how the solution to the original problem is obtained by combining the solutions to the subproblems.

Continuing with the factorial example, the recursive case would involve calling the factorial function with a smaller version of the problem. For example, to calculate the factorial of 5, the function multiplies 5 by the result of calling itself with 4, which in turn multiplies 4 by the result of calling itself with 3, and so on, gradually reducing the size of the problem until it reaches the base case.

Understanding the recursive case is crucial for designing efficient and elegant recursive algorithms. It requires careful consideration of how the problem can be divided into smaller, more manageable subproblems, and how the solutions to these subproblems can be combined to obtain the final solution.
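The factorial example described above can be sketched in a few lines of Python. This is a minimal illustration, not a production implementation; the function name `factorial` and the choice to reject negative input are assumptions made for the example.

```python
def factorial(n: int) -> int:
    """Return n! for a non-negative integer n."""
    if n < 0:
        raise ValueError("factorial is undefined for negative integers")
    # Base case: 0! and 1! are both 1, so no further recursion is needed.
    if n <= 1:
        return 1
    # Recursive case: n! = n * (n - 1)!
    return n * factorial(n - 1)


print(factorial(5))  # 120
```

Each call waits for the result of the smaller call before multiplying, so the multiplications happen as the calls return.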

Different Types of Recursion

Recursion can take on various forms, each with its unique characteristics and use cases. Understanding the different types of recursion can be helpful in selecting the most appropriate approach for solving a particular problem.

Tail Recursion

Tail recursion occurs when the recursive call is the last operation performed in a function. Because nothing remains to be done after the call returns, a compiler or interpreter that supports tail call optimization can reuse the current stack frame for the next call instead of allocating a new one, which keeps stack usage constant and helps prevent stack overflow errors. Not every language performs this optimization: Scheme requires it, for example, while the standard Python interpreter (CPython) and most Java implementations do not.

Consider a scenario where you need to calculate the factorial of a number using recursion. A tail-recursive approach would involve passing an accumulator variable to the recursive function. With each recursive call, the accumulator is updated, and the result is obtained when the base case is reached. This approach ensures that the recursive call is the last operation performed, which lets a language with tail call optimization run the function in constant stack space.
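As a rough sketch of the accumulator technique, the function below carries the running product in a second parameter. The names `factorial_tail` and `acc` are hypothetical. Note that CPython does not eliminate tail calls, so in Python this version still uses one stack frame per call; the example only shows the shape of a tail-recursive function.

```python
def factorial_tail(n: int, acc: int = 1) -> int:
    """Tail-recursive factorial: the running product travels in `acc`."""
    if n < 0:
        raise ValueError("factorial is undefined for negative integers")
    # Base case: the accumulator already holds the finished product.
    if n <= 1:
        return acc
    # The recursive call is the last operation; nothing is done with its
    # result except returning it.
    return factorial_tail(n - 1, acc * n)


print(factorial_tail(5))  # 120
```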

Head Recursion

Head recursion, also known as forward recursion, is the counterpart of tail recursion. In head-recursive functions, the recursive call is performed before any other work on the current input. The function makes the recursive call first and performs its own computations only after that call returns, so every call's stack frame must stay alive until the calls beneath it have finished.

An example of head recursion can be seen in traversing a linked list. When traversing a linked list using recursion, a head-recursive approach involves making the recursive call first to the next node and then performing any necessary operations on the current node. As a result, the nodes are processed in reverse order, from the last node back to the first. This approach can be useful when the order of operations is important, for example when you need to print or collect the elements of a singly linked list back to front.
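The linked-list traversal described above might look like the following sketch. The `Node` class and the `collect_reversed` function are hypothetical names introduced for illustration.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Node:
    value: int
    next: Optional["Node"] = None


def collect_reversed(node: Optional[Node], out: List[int]) -> None:
    """Append the list's values to `out`, last node first."""
    # Base case: we walked past the end of the list.
    if node is None:
        return
    # Head recursion: recurse on the rest of the list first...
    collect_reversed(node.next, out)
    # ...and handle the current node only after that call returns.
    out.append(node.value)


linked = Node(1, Node(2, Node(3)))
out: List[int] = []
collect_reversed(linked, out)
print(out)  # [3, 2, 1]
```

Moving the `append` above the recursive call would turn this into a front-to-back traversal.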

Mutual Recursion

Mutual recursion occurs when two or more functions call each other in a cycle. These functions work together to solve a problem by continually invoking one another. Each function’s execution depends on the results of the other functions, ultimately leading to the solution of the problem. Mutual recursion can be an effective approach for solving certain types of problems.

Consider a scenario where you need to simulate a game of rock-paper-scissors using recursion. You can implement mutual recursion by creating two functions: one for the player’s move and another for the computer’s move. The player’s move function calls the computer’s move function to determine the computer’s move, and vice versa. The two functions keep alternating until the game ends, and that end condition serves as the base case that stops the cycle of calls.
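A smaller illustration of mutual recursion than a full game simulation is the classic even/odd pair, shown here as a swapped-in example rather than the rock-paper-scissors scenario. The function names are hypothetical, and the sketch assumes non-negative integers.

```python
def is_even(n: int) -> bool:
    """Return True if the non-negative integer n is even."""
    if n == 0:
        return True  # base case: zero is even
    return is_odd(n - 1)  # n is even exactly when n - 1 is odd


def is_odd(n: int) -> bool:
    """Return True if the non-negative integer n is odd."""
    if n == 0:
        return False  # base case: zero is not odd
    return is_even(n - 1)  # n is odd exactly when n - 1 is even


print(is_even(10))  # True
print(is_odd(10))   # False
```

Neither function calls itself directly; the recursion arises only through the cycle between the two.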

Understanding the different types of recursion opens up a world of possibilities when it comes to problem-solving. By choosing the appropriate type of recursion for a given problem, you can optimize memory usage, improve efficiency, and create elegant and effective solutions.

The Role of Recursion in Programming

Recursion plays a crucial role in both computer science and software development. Its unique properties make it a powerful tool for solving a wide range of problems efficiently and elegantly.

Recursion in Computer Science

In computer science, recursion is extensively used for solving problems that can be naturally divided into smaller instances. It enables the creation of algorithms that simplify the problem-solving process by breaking down complex problems into smaller, more manageable subproblems.

For example, consider the problem of calculating the factorial of a number. Instead of using a loop to multiply each number from 1 to the given number, recursion allows us to define the factorial of a number as the product of that number and the factorial of the number minus one. This recursive definition makes the code concise and elegant.

Recursion is particularly useful for solving problems involving hierarchical or nested data structures, such as trees and graphs. It allows for concise and elegant code, often resulting in simpler and more maintainable solutions.
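To illustrate why recursion suits hierarchical data, here is a minimal sketch that counts the nodes of a tree. The `TreeNode` class and `count_nodes` function are assumptions made for the example, not part of any particular library.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class TreeNode:
    label: str
    children: List["TreeNode"] = field(default_factory=list)


def count_nodes(node: TreeNode) -> int:
    """Count this node plus every node beneath it."""
    # A leaf has no children, so the sum is 0 and the recursion stops there.
    return 1 + sum(count_nodes(child) for child in node.children)


tree = TreeNode("root", [
    TreeNode("a", [TreeNode("b"), TreeNode("c")]),
    TreeNode("d"),
])
print(count_nodes(tree))  # 5
```

The function mirrors the shape of the data: a tree is a node plus a list of smaller trees, and the count is one plus the counts of those smaller trees.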

Recursion in Software Development

Recursion is also widely used in software development. It enables developers to write clean and efficient code by encapsulating repetitive tasks in recursive functions or methods. This reduces code duplication and promotes code reuse, resulting in more modular and maintainable software.

For instance, consider a scenario where you need to search for a specific element in a nested list. By using recursion, you can define a function that checks each element in the list and, if it encounters another list, calls itself to search within that sublist. This recursive approach allows for a flexible and scalable solution, as it can handle lists of any depth.
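The nested-list search described above could be sketched as follows. The function name `contains` is hypothetical, and the sketch assumes the nesting uses plain Python lists and that the target itself is not a list.

```python
def contains(items: list, target) -> bool:
    """Return True if `target` appears anywhere in a nested list."""
    for item in items:
        if isinstance(item, list):
            # Recursive case: search inside the sublist.
            if contains(item, target):
                return True
        elif item == target:
            return True
    # Base case: every element was checked (or the list was empty).
    return False


nested = [1, [2, [3, [4]]], 5]
print(contains(nested, 4))  # True
print(contains(nested, 9))  # False
```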

Furthermore, recursion enables the implementation of various algorithms and data structures, such as sorting algorithms (e.g., quicksort, mergesort) and tree traversals (e.g., depth-first search). Breadth-first search, by contrast, is usually implemented iteratively with a queue rather than recursively. Algorithms such as quicksort and depth-first search often rely on recursion to solve complex problems with minimal code complexity.

For example, the quicksort algorithm uses recursion to partition the input array around a pivot element into smaller subarrays and recursively sort them. This recursive approach allows for efficient sorting of large datasets (O(n log n) time on average) with a relatively simple implementation.
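The following is a simplified sketch of the quicksort idea. It builds new lists instead of partitioning in place, which is easier to read but uses more memory than the classic in-place version; the middle-element pivot is an arbitrary choice made for the example.

```python
from typing import List


def quicksort(values: List[int]) -> List[int]:
    """Return a new sorted list (simplified, not in-place)."""
    # Base case: lists of length 0 or 1 are already sorted.
    if len(values) <= 1:
        return list(values)
    pivot = values[len(values) // 2]
    smaller = [v for v in values if v < pivot]
    equal = [v for v in values if v == pivot]
    larger = [v for v in values if v > pivot]
    # Recursive case: sort each side and join the pieces around the pivot.
    return quicksort(smaller) + equal + quicksort(larger)


print(quicksort([5, 2, 9, 1, 5, 6]))  # [1, 2, 5, 5, 6, 9]
```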

Advantages and Disadvantages of Using Recursion

While recursion offers many benefits, it is essential to consider its advantages and disadvantages when deciding whether to use it in a particular context.

Benefits of Recursion

Recursion offers several advantages that make it a valuable tool in problem-solving and programming:

- Simplicity: Recursion allows for more concise and readable code, simplifying complex problems by breaking them down into smaller, more manageable subproblems.

- Elegance: Recursive solutions often exhibit elegant and intuitive properties, making them easier to understand and reason about.

- Modularity: Recursive functions promote modular code design, enabling code reuse and reducing redundancy.

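- Efficiency: In certain cases, recursion can lead to more efficient solutions, particularly when dealing with hierarchical data structures, where a recursive version avoids the explicit stack bookkeeping that an equivalent iterative version needs. Recursion is not inherently faster than iteration, however.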

Potential Drawbacks of Recursion

Despite its advantages, recursion also has several potential drawbacks:

- Stack Overflow: Recursive algorithms may lead to stack overflow errors if the recursion depth becomes too large or if the base case is not properly defined.

- Performance Overhead: Recursive functions generally have more memory and time overhead compared to their iterative counterparts due to the additional stack frames created for each recursive call.

- Complexity: Recursive solutions can be challenging to design, debug, and analyze, especially for more complex problems.

- Space Complexity: Recursive algorithms may require more memory compared to iterative algorithms, as each recursive call adds a new stack frame to the call stack.

Let’s delve deeper into the potential drawbacks of recursion. One of the main concerns is the possibility of encountering a stack overflow error. This occurs when the recursion depth becomes too large, surpassing the system’s stack size limit. A proper base case is necessary for the recursion to terminate, but it is not sufficient on its own: even a correct recursive function can exceed the limit if the input requires too many nested calls.

In addition to stack overflow errors, recursive functions also tend to have more memory and time overhead compared to their iterative counterparts. This is because each recursive call creates a new stack frame, which consumes additional memory. Furthermore, the process of pushing and popping stack frames for each recursive call adds extra computational time.

While recursion offers simplicity and elegance, it can also introduce complexity, especially when dealing with more intricate problems. Designing and debugging recursive solutions may require a deeper understanding of the problem and careful consideration of the base case and recursive step. It is crucial to thoroughly analyze the problem and ensure that the recursive solution is correct and efficient.

Lastly, recursive algorithms may have higher space complexity compared to iterative algorithms. As each recursive call adds a new stack frame to the call stack, more memory is required. This can be a concern when working with large data sets or limited memory resources.
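The stack-depth concern can be observed directly in Python, which raises `RecursionError` once the number of nested calls passes the interpreter's limit (reported by `sys.getrecursionlimit()`). The functions below are hypothetical helpers written for this sketch; both have a correct base case, yet only the iterative one handles deep inputs.

```python
import sys


def count_down(n: int) -> int:
    """Recursive countdown: one stack frame per step."""
    if n == 0:
        return 0
    return count_down(n - 1)


def count_down_iterative(n: int) -> int:
    """Iterative countdown: constant stack usage."""
    while n > 0:
        n -= 1
    return n


def overflows(n: int) -> bool:
    """Return True if count_down(n) exceeds the recursion limit."""
    try:
        count_down(n)
    except RecursionError:
        return True
    return False


deep = sys.getrecursionlimit() + 100
print(overflows(deep))             # True
print(count_down_iterative(deep))  # 0
```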

Understanding Recursive Algorithms

Recursive algorithms are algorithms that solve a problem by recursively applying a set of rules or computations to smaller instances of the problem. Understanding how to design and analyze recursive algorithms is essential for implementing efficient and correct solutions to a wide range of problems.

When designing recursive algorithms, it is crucial to carefully consider the problem’s characteristics and choose the appropriate recursive calls and computations. The design process involves identifying the base case(s), defining the recursive case(s), and determining how the solutions to the subproblems can be combined to obtain the solution to the original problem. By breaking down the problem into smaller instances and applying the same set of rules or computations, recursive algorithms can effectively tackle complex problems.

Designing Recursive Algorithms

The design of recursive algorithms requires a deep understanding of the problem at hand. It involves breaking down the problem into smaller, more manageable subproblems, which can be solved using the same algorithm. By defining the base case(s), which represent the simplest instances of the problem that can be solved directly, and the recursive case(s), which describe how to solve larger instances by breaking them down into smaller ones, the algorithm can effectively navigate through the problem space.

Choosing the appropriate recursive calls and computations is crucial for the algorithm’s efficiency and correctness. It requires careful consideration of the problem’s characteristics, such as its structure and constraints. By making informed decisions about how to divide the problem and combine the solutions to the subproblems, the algorithm can efficiently solve the original problem.

Analyzing Recursive Algorithms

Analysis of recursive algorithms is essential to ensure their efficiency and to avoid issues like stack overflow or excessive memory usage. This analysis involves understanding the time complexity, space complexity, and termination conditions of the algorithm.

Time complexity analysis of recursive algorithms often involves establishing recurrence relations, which describe the relationship between the problem size and the number of operations performed by the algorithm. By solving these recurrence relations, it is possible to estimate the algorithm’s efficiency as the problem size grows. This analysis helps identify algorithms that are efficient and scalable, as well as those that may suffer from performance issues for larger problem instances.
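To make the idea of a recurrence relation concrete, here is a sketch of two standard cases solved by unrolling. The notation is assumed for illustration: $T(n)$ is the running time on input size $n$, $c$ is a constant cost per step, each multiplication is treated as constant time, and $n$ is taken to be a power of two in the mergesort case.

```latex
\begin{align*}
\text{Factorial:}\quad T(n) &= T(n-1) + c, \qquad T(0) = c \\
                            &= T(n-2) + 2c = \dots = T(0) + nc = \Theta(n) \\[6pt]
\text{Mergesort:}\quad T(n) &= 2\,T(n/2) + cn, \qquad T(1) = c \\
                            &= 4\,T(n/4) + 2cn = \dots = 2^{k}\,T(n/2^{k}) + k\,cn \\
                            &= n\,T(1) + cn\log_2 n = \Theta(n \log n) \qquad (k = \log_2 n)
\end{align*}
```

In both cases the recurrence simply restates the code: the cost of a call is the cost of its recursive calls plus the work done around them.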

Space complexity analysis focuses on understanding the memory requirements of the algorithm. Recursive algorithms typically use the call stack to keep track of the recursive calls and their corresponding variables. Analyzing the space complexity helps identify algorithms that may require excessive memory usage, potentially leading to stack overflow or other memory-related issues.

Properly designing and analyzing recursive algorithms is crucial for developing efficient and correct solutions to a wide range of problems. By carefully considering the problem’s characteristics, choosing appropriate recursive calls and computations, and analyzing the algorithm’s time and space complexity, it is possible to create algorithms that effectively solve complex problems while avoiding common pitfalls.

Frequently Asked Questions About Recursion

Here are answers to some common questions and misconceptions about recursion:

Common Misconceptions About Recursion

Recursion can be a challenging concept to grasp, and it’s not uncommon for misconceptions to arise. Let’s address some of the most common misunderstandings:

- Recursion is the same as iteration: While recursion and iteration both involve repetitive execution, they are distinct concepts. Recursion involves the repeated calling of a function within itself, while iteration involves looping through a set of instructions.

- Recursion is always more efficient than iteration: While recursion can offer more concise and elegant solutions, it is not always more efficient than iteration. Recursive algorithms can suffer from stack overflow and have more memory and time overhead.

- Recursion is limited to mathematical problems: Recursion is a versatile concept that extends well beyond mathematics. It can be applied to various problem domains, including software development, data structures, and algorithms.

Tips for Mastering Recursion

Mastering recursion requires practice and understanding. Here are some tips to help you become proficient in recursion:

- Start with simpler problems and gradually tackle more complex ones.

- Identify the base case(s) and the recursive case(s) of a problem.

- Visualize the problem and its recursive structure using diagrams or pseudocode.

- Test your recursive algorithms with small input sizes and check the results against a known solution.

- Study and analyze existing recursive algorithms to gain insights into their design and efficiency.
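The tip about testing on small inputs can be sketched as follows, using Python's built-in `math.factorial` as the known solution. The recursive `factorial` here is a hypothetical function under test.

```python
import math


def factorial(n: int) -> int:
    """Recursive factorial under test (non-negative n assumed)."""
    return 1 if n <= 1 else n * factorial(n - 1)


# Compare against a trusted reference on small inputs, including the
# base cases 0 and 1, where recursive code most often goes wrong.
for n in range(10):
    assert factorial(n) == math.factorial(n), f"mismatch at n={n}"

print("all small cases match")
```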

In conclusion, recursion is a fundamental concept in computer science and programming. By breaking down complex problems into smaller, more manageable subproblems, recursion allows for elegant and efficient solutions. Understanding the principles, types, advantages, and disadvantages of recursion, as well as the design and analysis of recursive algorithms, is key to becoming proficient in problem-solving and programming. With practice and perseverance, you can harness the power of recursion to tackle a wide range of challenges.